Local k8s testing infra

k8s cluster (1 master + X nodes)

Current config:

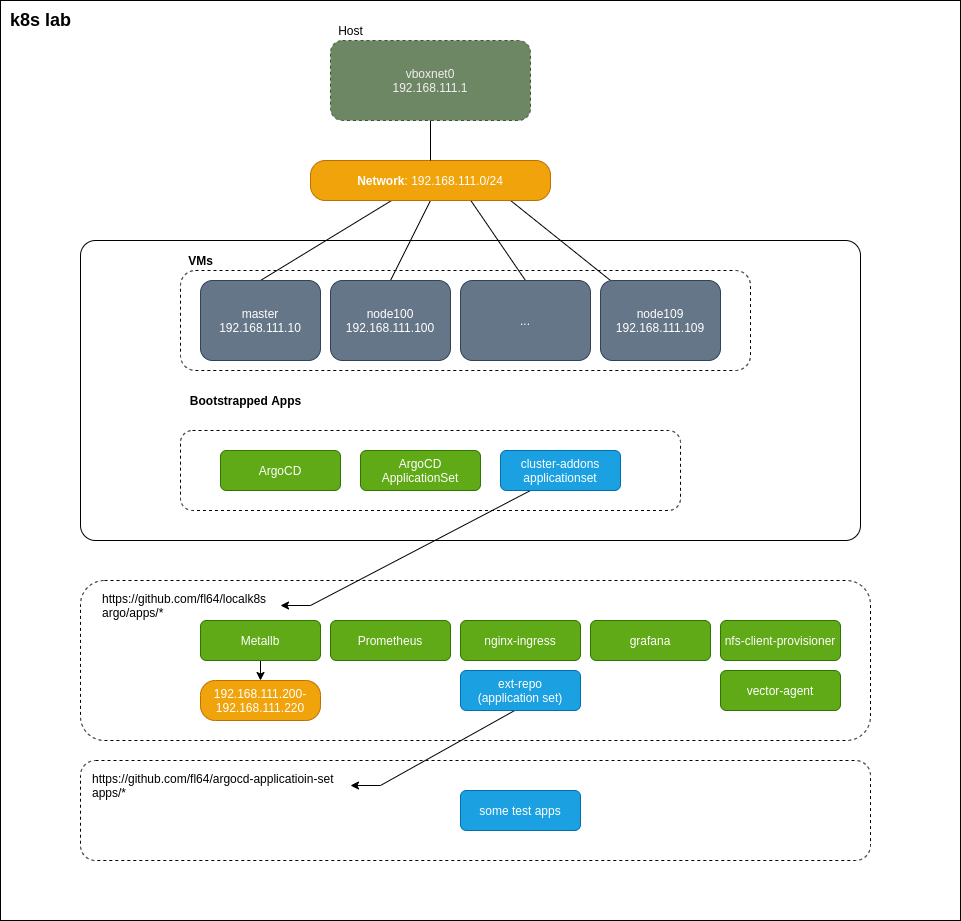

This lab is designed for locally testing different k8s features and deployments with ArgoCD.

All you need is Vagrant, VirtualBox, and some time to deploy it.

In its basic configuration, the k8s cluster consists of two virtual machines: master and node. If you want more nodes, change the `default_node_count` variable or create the lab with the `K8S_NODE_COUNT=X vagrant up` command.
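
As a rough sketch, the node count override can be wrapped in a small helper that validates the value before it is handed to Vagrant. The `lab_up_cmd` name is hypothetical and not part of this repository, and the default of one node is an assumption based on the basic configuration described above. The helper only prints the command, so you can inspect it before running it.

```shell
# Hypothetical helper (not part of this repo): build the `vagrant up`
# command line for a given number of worker nodes.
lab_up_cmd() {
  # Assumption: one worker node when no argument is given.
  count="${1:-1}"

  # Reject anything that is not made of digits only.
  case "$count" in
    *[!0-9]*)
      echo "node count must be a positive integer" >&2
      return 1
      ;;
  esac

  # Reject zero.
  if [ "$count" -le 0 ]; then
    echo "node count must be a positive integer" >&2
    return 1
  fi

  printf 'K8S_NODE_COUNT=%s vagrant up\n' "$count"
}

# Example: print the command for a lab with three worker nodes.
lab_up_cmd 3
```

To actually start the lab, copy the printed command into your shell from the directory that contains the Vagrantfile.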

Once the cluster is installed and configured, ArgoCD takes over and installs all the applications from the argo/app directory of the current repository.

Install the required Ansible collections:

    ansible-galaxy collection install community.general
    ansible-galaxy collection install ansible.posix
    ansible-galaxy collection install community.kubernetes

    # bring up the VMs and run the ansible playbook
    vagrant up

    # run playbooks for specified ansible tags
    K8S_TAGS=common vagrant up

    # ssh to VMs
    vagrant ssh master
    vagrant ssh node100
    # etc...

    # how to get to the UIs with `k port-forward`
    ## how to get into the Argo UI
    k port-forward service/argocd-server -n argocd 8080:80
    # browse to http://localhost:8080

    ## how to get into the Prometheus UI
    k port-forward service/prometheus-server -n prometheus 9090:80
    # browse to http://localhost:9090

    ## how to get into the Grafana UI
    k port-forward service/grafana -n grafana 3000:80
    # browse to http://localhost:3000

    # Another way, with ingress and /etc/hosts
    ## Add local records to /etc/hosts
    sudo bash -c "cp /etc/hosts /etc/hosts.backup && export HOSTS_PATCH=\"$(kubectl get svc -n ingress-nginx ingress-nginx-controller -o jsonpath=\"{.status.loadBalancer.ingress[0].ip}\") grafana.k8s.local argo.k8s.local prom.k8s.local\"; grep -qF \"\${HOSTS_PATCH}\" -- /etc/hosts || echo \"\${HOSTS_PATCH}\" >> /etc/hosts"

    ## Browse
    # https://grafana.k8s.local
    # https://argo.k8s.local
    # https://prom.k8s.local

    ## default login and password everywhere: admin/password

    # clean up
    vagrant destroy
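
The /etc/hosts one-liner above is dense, so here is a minimal sketch of the same "append only if missing" idea as a reusable function. The `add_hosts_line` name is hypothetical, and `192.0.2.10` is a placeholder address standing in for the ingress controller's load balancer IP. The example writes to a scratch file rather than the real /etc/hosts.

```shell
# Hypothetical helper (not part of this repo): append a line to a
# hosts-style file only when that exact line is not already present.
add_hosts_line() {
  file="$1"
  line="$2"
  # -x matches whole lines only, -F treats the pattern as a fixed string.
  grep -qxF -e "$line" -- "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Example on a scratch file; 192.0.2.10 is a placeholder IP.
tmp_hosts="$(mktemp)"
entry="192.0.2.10 grafana.k8s.local argo.k8s.local prom.k8s.local"
add_hosts_line "$tmp_hosts" "$entry"
add_hosts_line "$tmp_hosts" "$entry"   # second call changes nothing
cat "$tmp_hosts"
rm -f "$tmp_hosts"
```

Because the check matches the whole line, running it again after the load balancer IP changes adds a new record rather than updating the old one, so stale entries would still need to be removed by hand.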

Shell completion, the `k` alias, and a dry-run shortcut, appended to ~/.bashrc:

    cat <<EOF >> ~/.bashrc
    source <(kubectl completion bash)
    source <(kubeadm completion bash)
    alias k=kubectl
    complete -F __start_kubectl k
    export do="--dry-run=client -oyaml"
    EOF
    source ~/.bashrc
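
The `do` variable holds the flags for a client-side dry run with YAML output, so a command such as `k run nginx --image=nginx $do` prints a manifest instead of creating anything. A function-based variant of the same idea might look like the sketch below. The `gen_manifest` name is hypothetical and not part of this repository.

```shell
# Hypothetical helper (not part of this repo): run any kubectl command in
# client-side dry-run mode and print the resulting manifest as YAML.
gen_manifest() {
  kubectl "$@" --dry-run=client -o yaml
}

# Usage (requires kubectl on PATH):
#   gen_manifest run nginx --image=nginx > pod.yaml
#   gen_manifest create deployment web --image=nginx > deploy.yaml
```

A function avoids relying on unquoted word splitting of `$do`, at the cost of one more name to remember.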

Vim settings, appended to ~/.vimrc:

    cat <<EOF >> ~/.vimrc
    set number
    set et
    set sw=2 ts=2 sts=2
    EOF

Watch dropped traffic with Hubble:

    k port-forward service/hubble-relay -n kube-system 4245:80 &
    hubble observe --verdict DROPPED -f

Install kubectl plugins with krew:

    kubectl krew install access-matrix
    kubectl krew install view-utilization
    kubectl krew install view-webhook
    kubectl krew install example

kubernetes-homelab: https://github.com/lisenet/kubernetes-homelab

[x] move to containerd